(in which a single comment substitutes for a butterfly’s wing, metaphorically speaking)

A week or so back, as part of the unfocused rant¹ that stemmed from exposure to a particularly ghastly Excel travesty, I touched on the rough-and-ready metrics I use when sizing up unfamiliar VBA code.

Since it struck a chord with at least one person² and I'd been meaning for months to write something more about it, I'm going to ramble about my feeble (but still surprisingly useful) approach to VBA code metrics.

The original idea came from three contemporaneous events several years ago: a large and nasty workbook that needed heavy modification, some reading about McCabe's cyclomatic complexity metric, and stumbling across a little VB utility named Oh No!

Oh No!

This utility scanned a VB project and displayed a chart intended to show where problem areas might lie, plotting the size and complexity of each routine (Function, Sub, Property, etc.) on its X and Y axes. Size was the number of non-blank, non-comment lines, while complexity was (from the Help file):

The complexity is measured as the total number of structure statements in a procedure. These statements are: Do, For, If, ElseIf, Select, While and With.

It looked like this (pointed in this instance at its own source code):

The third dimension, “blob size”, was related to the number of parameters in the routine. All in all, it’s a nicely-done, well thought-out little app.
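To make the Help file's definition concrete, here is a rough Python sketch of it. It isn't Oh No!'s code. It assumes a structure statement can be recognised by the first word of a line, strips ' comments (but not Rem ones) and doesn't join continued lines. The names `structure_count` and `strip_comment` are made up for illustration.

```python
import re

# The structure statements listed in Oh No!'s Help file.
STRUCTURE_KEYWORDS = {"do", "for", "if", "elseif", "select", "while", "with"}


def strip_comment(line: str) -> str:
    """Drop a trailing ' comment, ignoring apostrophes inside string literals."""
    in_string = False
    for i, ch in enumerate(line):
        if ch == '"':
            in_string = not in_string
        elif ch == "'" and not in_string:
            return line[:i]
    return line


def structure_count(proc_lines: list[str]) -> int:
    """Count lines whose first word is one of the structure keywords."""
    count = 0
    for raw in proc_lines:
        code = strip_comment(raw).strip()
        if not code:
            continue
        # "Do While" counts once (as Do); "Loop", "Next", "End If" don't count.
        first_word = re.split(r"[\s(]", code, maxsplit=1)[0].lower()
        if first_word in STRUCTURE_KEYWORDS:
            count += 1
    return count
```

Fed a small routine containing an If, a For, an ElseIf and a Do While, it returns 4.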


For my purposes it had its drawbacks, of course. To start with, it wanted a .vbp (VB project) file as input, something I didn't have in my Excel/VBA project. For another, I didn't seem to have the sort of brain that could easily extract useful information from the graphical display. It didn't cope with continued lines, which I use a lot, and I felt that size and parameter count were themselves contributors to complexity: we didn't really need three dimensions.
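Coping with continued lines mostly means joining them before doing anything else. Here is a minimal Python sketch that assumes the only continuation marker is VBA's trailing space-underscore (` _`). The 60-character threshold is the long-line limit from the tariff later on. Measuring length without leading indentation is an assumption, and both function names are hypothetical.

```python
def join_continued_lines(physical_lines: list[str]) -> list[str]:
    """Merge VBA lines ending in ' _' (space, underscore) into logical lines."""
    logical: list[str] = []
    pending = ""
    for line in physical_lines:
        stripped = line.rstrip()
        # Keep the first physical line's indentation; trim continuation indents.
        piece = stripped.lstrip() if pending else stripped
        if stripped.endswith(" _"):
            # Drop the marker and keep accumulating the logical line.
            pending += piece[:-2].rstrip() + " "
        else:
            logical.append(pending + piece)
            pending = ""
    if pending:  # input ended mid-continuation: keep what we have
        logical.append(pending.rstrip())
    return logical


def count_long_lines(logical_lines: list[str], limit: int = 60) -> int:
    """Count logical lines of at least `limit` characters, ignoring indentation."""
    return sum(1 for line in logical_lines if len(line.strip()) >= limit)
```

A three-line `MsgBox` call split with ` _` comes back as a single logical line, which is what any per-line classification needs to see.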

Most important of all, writing my own app looked like a fun part-time project and I’m a complete sucker for those.

I should confess now that a cyclomatic complexity calculation proved to be beyond me when I developed this utility in 2002. It’s probably still beyond me now, but back then the reference I had was the original paper and gaining the necessary understanding from that would in all likelihood have taken all the fun out of the whole thing. So I fudged it. Sue me.

Approaching the problem

The VBComponents of the target workbook are scanned and a collection of modules is constructed, each holding a separate object for every procedure³ in that module. As each procedure object is built up from its lines, it attempts to classify each line it's presented with and tallies up the incidences of things worth counting:

```vba
Public Enum eCounterType
    ecComments = 1
    ecDeclares = 2
    ecElseIfs = 3
    ecGotos = 4
    ecHighestNestLevel = 5
    ecIifs = 6
    ecIfs = 7
    ecLines = 8
    ecLongLines = 9
    ecLoops = 10
    ecMultiDimCount = 11
    ecOneCharVars = 12
    ecParameters = 13
    ecSelects = 14
    ecWiths = 15
End Enum
```

You may have spotted that the deepest nesting is also recorded, although it’s not exactly a “counter” – perhaps I should have used “scoreCard” or something more expressive.
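In outline, the tallying amounts to looking at the first word of each line and bumping the matching counter. The sketch below is Python rather than the tool's VBA and mirrors only some of eCounterType's members, keeping their values. Its keyword-to-counter mapping is a guess at the rules rather than the real ones, and it only recognises whole-line comments. `tally` and `count_declared` are hypothetical names.

```python
from collections import Counter
from enum import IntEnum


class CounterType(IntEnum):
    """Some of eCounterType's members, with the same values as the VBA Enum."""
    COMMENTS = 1
    DECLARES = 2
    ELSEIFS = 3
    GOTOS = 4
    IFS = 7
    LINES = 8
    LOOPS = 10
    MULTI_DIM_COUNT = 11
    SELECTS = 14
    WITHS = 15


# Assumed mapping from a statement's first word to the counter it bumps.
FIRST_WORD = {
    "elseif": CounterType.ELSEIFS,
    "if": CounterType.IFS,
    "do": CounterType.LOOPS,
    "for": CounterType.LOOPS,
    "while": CounterType.LOOPS,
    "select": CounterType.SELECTS,
    "with": CounterType.WITHS,
}


def count_declared(dim_line: str) -> int:
    """Variables on a Dim line: top-level commas + 1 (commas in array bounds skipped)."""
    depth, names = 0, 1
    for ch in dim_line:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        elif ch == "," and depth == 0:
            names += 1
    return names


def tally(proc_lines: list[str]) -> Counter:
    counts: Counter = Counter()
    for raw in proc_lines:
        code = raw.strip()
        if not code:
            continue
        if code.startswith("'"):  # whole-line comments only
            counts[CounterType.COMMENTS] += 1
            continue
        counts[CounterType.LINES] += 1  # every non-blank, non-comment line
        words = code.lower().split()
        if words[0] == "dim":
            declared = count_declared(code)
            counts[CounterType.DECLARES] += declared
            if declared > 1:
                counts[CounterType.MULTI_DIM_COUNT] += 1
        elif words[0] == "goto" or words[:2] == ["go", "to"]:
            counts[CounterType.GOTOS] += 1
        elif words[0] in FIRST_WORD:
            counts[FIRST_WORD[words[0]]] += 1
    return counts
```

The deepest nesting level needs its own running counter, which is where single-line Ifs cause trouble.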

Each procedure gets a complexity “score”, which ended up being calculated, after much tweaking, using a tariff something like this:

| Pts | Awarded for |
|---|---|
| 1.0 | every “nesting” command (If, Do, For, Select, ElseIf, etc.) |
| 0.1 | each line of executable code after the first, rounded down |
| 0.1 | each declared variable after the first, rounded down |
| 1.0 | each line that contains multiple Dims |
| 0.5 | each parameter after the first |
| 2.0 | each If (more than loops because of the arbitrarily complex branching) |
| 0.2 | each long line (defined as 60 chars or more, after concatenating continued lines) |
| 1.0 | each Go To |
| special | the square of the deepest nesting level encountered |

It’s not supposed to be exact and it doesn’t need to be: the purpose is to give a quick sanity check and, particularly when encountering new code for the first time, an idea of where problem areas may lie.
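As a rough Python rendering of the tariff, here is a sketch with one loudly flagged assumption: an If is taken to score 2.0 *instead of* the 1.0 nesting point, not on top of it. The table doesn't say which, and that reading is a guess. `ProcCounts` and `score` are hypothetical names, not the tool's.

```python
from dataclasses import dataclass


@dataclass
class ProcCounts:
    """Per-procedure tallies; field names are illustrative, not the tool's."""
    lines: int = 0            # executable lines
    declares: int = 0         # declared variables
    multi_dim_lines: int = 0  # lines declaring more than one variable
    parameters: int = 0
    ifs: int = 0
    other_nesting: int = 0    # Do, For, While, Select, ElseIf, With ...
    long_lines: int = 0       # 60+ chars after joining continued lines
    gotos: int = 0
    max_nest: int = 0


def score(c: ProcCounts) -> float:
    points = 0.0
    points += 2.0 * c.ifs                     # assumed to replace, not add to, the 1.0 below
    points += 1.0 * c.other_nesting           # every other nesting command
    points += max(c.lines - 1, 0) // 10       # 0.1 per line after the first, rounded down
    points += max(c.declares - 1, 0) // 10    # 0.1 per variable after the first, rounded down
    points += 1.0 * c.multi_dim_lines
    points += 0.5 * max(c.parameters - 1, 0)
    points += 0.2 * c.long_lines
    points += 1.0 * c.gotos
    points += c.max_nest ** 2                 # the "special" term
    return points
```

Plugging in ProcessLine's counts from the report further down (20 lines, one declare, five Ifs, nesting 1, assuming no more than one parameter) gives 12. CountParameters' counts give 17. Both match the report's X column. Load and CheckForOneCharVars come out at 7 rather than 9 and 8, so the real tariff evidently picks up something this sketch doesn't.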

There are deficiencies. I don't think the code handles single-line Ifs (the kind with no End If) correctly: I believe it wrongly increments the nesting level for them, which leads to a higher score. Since I really don't like single-line Ifs, that just increases the chance that I'll notice them, so I was happy to leave it like that.
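Telling the two kinds of If apart only takes a check for anything other than a comment after `Then`. Here is a minimal Python sketch of a nesting tracker that does so. The keyword sets are assumptions, and the `Then`-matching regex is naive: a "then" inside a string literal in the condition will fool it. `Next i, j` closing two loops at once isn't handled, and `is_block_if` and `deepest_nesting` are hypothetical names.

```python
import re

# Assumed block openers/closers, keyed on a statement's lower-cased first word.
OPENERS = {"do", "for", "select", "while", "with"}
CLOSERS = {"loop", "next", "wend"}
BLOCK_ENDS = {"if", "select", "with"}  # second word after "End"


def is_block_if(code: str) -> bool:
    """True for 'If ... Then' with nothing (bar a comment) after Then."""
    match = re.match(r"(?i)if\b.*?\bthen\b(.*)$", code)
    if not match:
        return False
    rest = match.group(1).strip()
    return rest == "" or rest.startswith("'")


def deepest_nesting(proc_lines: list[str]) -> int:
    level = deepest = 0
    for raw in proc_lines:
        code = raw.strip()
        if not code or code.startswith("'"):
            continue
        words = code.lower().split()
        first = words[0]
        if first == "end" and len(words) > 1 and words[1] in BLOCK_ENDS:
            level -= 1
        elif first in CLOSERS:
            level -= 1
        elif (first == "if" and is_block_if(code)) or first in OPENERS:
            level += 1
            deepest = max(deepest, level)
        # Single-line Ifs fall through here and leave the level alone.
    return deepest
```

For a routine that starts with `If a > 0 Then b = 1` and then has a block If inside a For loop, this reports 2. Counting the single-line If as an opener would have given 3.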

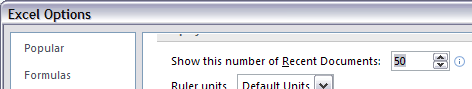

The output includes other metrics too: information on the whole project and module-level summaries (if you see 300 global variables, for example, you might consider that worth investigating).

There's no penalty for “Static” declarations, probably because I hadn't yet stumbled across any code that thought they were a good idea. If I added one, I'd make it a fairly hefty tariff: Static variables are a major obstacle to the unravelling process.

Another “drawback”: there's no real parser. A better approach might have been to write one and work from an AST (the very useful roodi library for Ruby uses one and can report a “proper” cyclomatic complexity metric as a result). Also, I didn't use regular expressions: I probably hadn't encountered them at the time (or was still frightened of them), and they would have made some of the line parsing a good deal terser.
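As an illustration of that terseness, here is a Python sketch that finds one-character variable names on a Dim line, the sort of thing the ecOneCharVars counter records. The patterns are illustrative, not LOCutus's. They strip parenthesised array bounds first and treat everything after an apostrophe as a comment, which is naive. `one_char_vars` is a hypothetical name.

```python
import re

DIM_LINE = re.compile(r"^\s*Dim\s+(.*)$", re.IGNORECASE)
ARRAY_BOUNDS = re.compile(r"\([^)]*\)")
DECLARED_NAME = re.compile(r"(?:^|,)\s*([A-Za-z]\w*)")  # first word of each declaration


def one_char_vars(line: str) -> list[str]:
    """Return the one-character variable names declared on a Dim line."""
    match = DIM_LINE.match(line)
    if not match:
        return []
    decl = match.group(1).split("'")[0]   # drop any trailing comment
    decl = ARRAY_BOUNDS.sub("", decl)     # "grid(1 To 3, x)" -> "grid"
    names = DECLARED_NAME.findall(decl)
    return [name for name in names if len(name) == 1]
```

For `Dim i As Long, total As Double, j` it returns `["i", "j"]`. The character-by-character equivalent takes noticeably more code.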

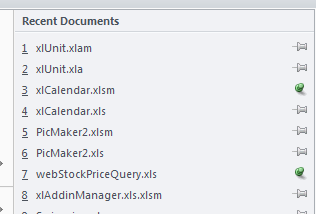

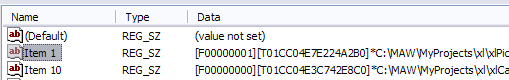

I called it “LOCutus” – “LOC” for “lines of code”, the balance presumably indicating that the Borg were on my mind at the time. For better or worse, I've added it to the xlVBADevTools download (in a ZIP file with xlUnit). It's an Excel XP file. I don't think I've ever tested it on XL2007 or higher, since I've yet to work at a site where it was used and prefer not to look at large crappy Excel apps without being paid for it…

If you download it, you'll see that the report shows the results of a scan of the app's own code (reproduced as an HTML table below, so formatting may vary slightly):

| Module | Globals | Members | Procs |
|---|---|---|---|
| VBProc | 0 | 7 | 21 |

| Procedure | Lines | Max nest | Comments | Declares | Ifs | Loops | Long lines | Go Tos | OCV (one-char vars) | X (score) |
|---|---|---|---|---|---|---|---|---|---|---|
| Load | 8 | 2 | 0 | 3 | 1 | 1 | 0 | 0 | 0 | 9 |
| ProcessLine | 20 | 1 | 0 | 1 | 5 | 0 | 0 | 0 | 0 | 12 |
| CheckForOneCharVars | 7 | 2 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 8 |
| CountParameters | 14 | 3 | 0 | 4 | 3 | 1 | 0 | 0 | 0 | 17 |

The “worst” proc scores 17, largely due to its 3-level nesting. If I needed to change it, I'd consider breaking out the inner loop (or turning the outermost If into a guard clause). Seventeen isn't generally high enough to concern me, though.
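The guard-clause idea isn't VBA-specific (in VBA the early return would be `Exit Function` or `Exit Sub`). Here is a minimal Python illustration using a made-up function, not CountParameters, whose source isn't reproduced here. Both versions behave identically, but the second has a maximum nesting depth of one instead of two. Under the tariff's squared-nesting term, that is the difference between 4 points and 1.

```python
def describe_nested(values: list[int]) -> str:
    """Nested version: the interesting branch sits two Ifs deep."""
    result = "empty"
    if values:
        if all(v >= 0 for v in values):
            result = f"max={max(values)}"
        else:
            result = "has negatives"
    return result


def describe_guarded(values: list[int]) -> str:
    """Same behaviour with guard clauses: special cases return early."""
    if not values:
        return "empty"
    if any(v < 0 for v in values):
        return "has negatives"
    return f"max={max(values)}"
```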

That’s the most “formal” part of the diagnostic “toolkit” that I apply to strange new Excel spreadsheets – anyone out there have something else we could look at?

xlVBADevTools download

¹ Aren't they all? You should hear me around the office. Probably not, actually.

² OK, exactly one.

³ These days I favour “routine” as a generic term for Function/Sub/Property or whatever. Times change.